Experienced authors want the best editors and reviewers to work on their manuscripts, because the work of these professionals is valuable for improving the content. How can an independent author, who may work alone and lacks a big budget, get the same kind of help as established authors? One author has created an online feedback toolkit and has already used it for his own book.

Matthew J. Salganik, a professor of sociology at Princeton University, was writing his book, Bit by Bit: Social Research in the Digital Age, when he realized he should practice what he was writing about. The book is about the new possibilities the digital world opens up for researchers.

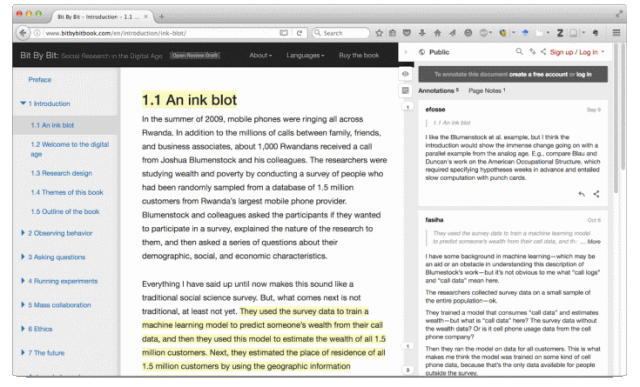

He created a web site where he posted his work-in-progress manuscript for anyone to read. He also integrated an annotation tool, hypothes.is, that allowed anyone to annotate the manuscript. All visitors could see both the manuscript and the annotations.

By the time Salganik decided he had enough public feedback and closed the comments, he had received 495 annotations from 31 people. That’s a good result, and it probably means that he had spread the word among his contacts, asking them to visit the review site and contribute their feedback.

Salganik regards the annotations as extremely helpful in improving his manuscript. He sees them as complementing his work, which sets them apart from the results of a typical peer review. He says the feedback was focused on helping him write the book that he wanted to write, and it didn’t lead to major changes. The annotations were often focused on improving specific sentences.

In addition to the feedback itself, Salganik’s web site collected statistics that reveal interesting things about the process.

Most feedback was given by a handful of reviewers. Although the author managed to get comments from a good number of people, most of them left only a single comment.

The reviewers were most active early in the process, right after the author opened the manuscript for review.

The review process motivated the author to carry on writing the book.

The author was able to collect a list of email addresses from people who visited the review site.

After the review was closed, the author realized he should have integrated automatic page-visit analytics into the system. That would have given him statistics on which sections were read the most and the least.
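The reviewer-concentration and timing patterns described above can be tallied from annotation records with a few lines of code. The following is only a minimal sketch, not the toolkit's own code: the function names are hypothetical, the record shape (a `user` field and an ISO-formatted `created` timestamp) is an assumption about how exported annotations might look, and the sample records are made up for illustration.

```python
from collections import Counter


def summarize_annotations(annotations):
    """Count annotations per reviewer and per calendar day.

    Assumes each record is a dict with a 'user' string and a
    'created' ISO timestamp such as '2016-01-01T10:00:00'.
    """
    per_reviewer = Counter(a["user"] for a in annotations)
    # The first 10 characters of an ISO timestamp are the date (YYYY-MM-DD).
    per_day = Counter(a["created"][:10] for a in annotations)
    return per_reviewer, per_day


def share_of_top_reviewers(per_reviewer, top_n):
    """Fraction of all annotations written by the top_n most active reviewers."""
    total = sum(per_reviewer.values())
    if total == 0:
        return 0.0
    top = sum(count for _, count in per_reviewer.most_common(top_n))
    return top / total


# Made-up sample records, for illustration only (not Salganik's data).
sample = [
    {"user": "reviewer_a", "created": "2016-01-01T10:00:00"},
    {"user": "reviewer_a", "created": "2016-01-01T11:30:00"},
    {"user": "reviewer_b", "created": "2016-01-01T12:00:00"},
    {"user": "reviewer_a", "created": "2016-01-02T09:15:00"},
    {"user": "reviewer_c", "created": "2016-01-05T17:45:00"},
]

per_reviewer, per_day = summarize_annotations(sample)
print(per_reviewer.most_common())
print(sorted(per_day.items()))
print(share_of_top_reviewers(per_reviewer, 1))
```

A per-section read count, which the author wished he had collected, could be tallied the same way by counting page-visit records by section instead of by reviewer.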

Salganik has published the toolkit he used for his review process as an open source software package, the Open Review Toolkit. Technical skills are required to set up a working online review system for a manuscript.

Other systems, often based on wiki software, have also been used for developing a manuscript in public and accepting feedback during the process. Not everyone finds wiki-based systems user-friendly, so a dedicated review toolkit may help authors.